Introducing Verkada's New Motion Search Experience With Trajectory Analysis

In our ongoing mission to enhance customer experience and facilitate more efficient access to security footage, we've updated our motion search feature.

Our enhanced motion search feature takes advantage of new state-of-the-art detection models and tracking algorithms, which dramatically outperform traditional approaches to motion search. We also leverage new image processing chips that provide 100x more compute power than our previous CPU-based implementations. With these new algorithms and chips, our motion detection now runs 10 times per second, making it easier to detect an object's motion and trajectory.

Here's why this matters: these improvements enable more accurate and robust detection of people at a distance. People can now be detected at 125 feet without optical zoom; our previous algorithms were limited to 25 feet at the same level of precision and recall.
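To see why the jump from 25 to 125 feet matters, a simple pinhole-camera approximation helps: an object's height in the image shrinks in proportion to its distance from the camera. The sketch below assumes a hypothetical focal length of 1,000 pixels and a 5.5-foot person; neither value comes from Verkada's hardware, and real lenses add distortion that this model ignores.

```python
def pixel_height(object_height_ft: float, distance_ft: float, focal_length_px: float) -> float:
    """Pinhole-camera approximation of an object's height in the image, in pixels."""
    return focal_length_px * object_height_ft / distance_ft


FOCAL_PX = 1000.0  # hypothetical focal length in pixels; real cameras differ
PERSON_FT = 5.5    # assumed height of a person, in feet

near = pixel_height(PERSON_FT, 25, FOCAL_PX)   # previous detection limit
far = pixel_height(PERSON_FT, 125, FOCAL_PX)   # new detection limit
print(near, far, near / far)                   # 220.0 44.0 5.0
```

Under these assumptions, a person at 125 feet spans about one fifth as many pixels as the same person at 25 feet, so the detector has to work with far less visual detail.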

Enhancing Motion Detection

New computer vision (CV) detection models, such as the YOLOX series, deliver greater accuracy and reliability in identifying people and vehicles. These models have been trained on extensive datasets that span diverse environmental conditions, varying degrees of occlusion, and reflections. Building on these model advancements, Verkada's system now surpasses all of our previous benchmarks, yielding 50% more Hyperzoom detections per camera.

These gains in accuracy come with tradeoffs. Highly accurate detection models detect a higher volume of objects, and because detection runs many times per second, the same object is reported again and again. The result can be an overwhelming volume of duplicate detections (e.g., 100 detections of the same person in a 10-second period) that make search results harder to filter.

To address this duplication problem and keep the user experience as seamless as possible, Verkada's motion search feature incorporates state-of-the-art tracking algorithms that deduplicate ("de-dupe") detections. These algorithms use techniques such as data association, Kalman filters, and ByteTrack to link detections of the same object across frames and consolidate them over time. As a result, only relevant, unique objects appear in search results.
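The following is a minimal sketch of tracking-by-detection deduplication, not Verkada's implementation. It borrows ByteTrack's central idea of associating high-confidence detections first and then using low-confidence detections to keep still-unmatched tracks alive. However, it uses greedy IoU (intersection-over-union) matching and omits the Kalman-filter motion prediction that ByteTrack applies before matching. The class name `ByteStyleTracker` and the thresholds (`high=0.6`, `min_iou=0.3`, `max_missed=10`) are hypothetical.

```python
from dataclasses import dataclass, field

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Detection = tuple[Box, float]            # a box plus the detector's confidence


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    box: Box
    missed: int = 0                                    # consecutive frames without a match
    history: list[Box] = field(default_factory=list)   # every box assigned to this track


def greedy_match(tracks, dets, min_iou):
    """Pair tracks with detections by descending IoU.
    Returns (matches, unmatched track indices, unmatched detection indices)."""
    candidates = sorted(
        ((iou(t.box, d[0]), ti, di) for ti, t in enumerate(tracks) for di, d in enumerate(dets)),
        reverse=True,
    )
    matches, used_t, used_d = [], set(), set()
    for score, ti, di in candidates:
        if score < min_iou:
            break  # remaining candidates overlap even less
        if ti in used_t or di in used_d:
            continue
        matches.append((ti, di))
        used_t.add(ti)
        used_d.add(di)
    rest_t = [i for i in range(len(tracks)) if i not in used_t]
    rest_d = [i for i in range(len(dets)) if i not in used_d]
    return matches, rest_t, rest_d


class ByteStyleTracker:
    """Simplified two-stage association in the spirit of ByteTrack (no motion prediction)."""

    def __init__(self, high=0.6, min_iou=0.3, max_missed=10):
        self.high, self.min_iou, self.max_missed = high, min_iou, max_missed
        self.tracks: list[Track] = []
        self.finished: list[Track] = []
        self._next_id = 0

    def update(self, dets: list[Detection]) -> None:
        high = [d for d in dets if d[1] >= self.high]
        low = [d for d in dets if d[1] < self.high]
        # Stage 1: match confident detections against all live tracks
        m1, rest_t, rest_high = greedy_match(self.tracks, high, self.min_iou)
        for ti, di in m1:
            self._extend(self.tracks[ti], high[di][0])
        # Stage 2: low-confidence detections may only extend still-unmatched tracks
        leftover = [self.tracks[i] for i in rest_t]
        m2, rest_t2, _ = greedy_match(leftover, low, self.min_iou)
        for ti, di in m2:
            self._extend(leftover[ti], low[di][0])
        for i in rest_t2:
            leftover[i].missed += 1
        # Retire tracks that have gone unmatched for too long
        alive = []
        for t in self.tracks:
            (alive if t.missed <= self.max_missed else self.finished).append(t)
        self.tracks = alive
        # Only confident, unmatched detections may start new tracks
        for di in rest_high:
            box = high[di][0]
            self.tracks.append(Track(self._next_id, box, history=[box]))
            self._next_id += 1

    def _extend(self, track: Track, box: Box) -> None:
        track.box, track.missed = box, 0
        track.history.append(box)

    def all_tracks(self) -> list[Track]:
        return self.finished + self.tracks


# One person walking for 10 seconds at 10 detections per second
tracker = ByteStyleTracker()
for frame in range(100):
    x = 100 + 2 * frame
    conf = 0.4 if frame % 7 == 3 else 0.9  # occasional low-confidence frame
    tracker.update([((x, 50, x + 40, 130), conf)])
tracks = tracker.all_tracks()
print(len(tracks), len(tracks[0].history))  # 1 100
```

In this simulated clip, 100 per-frame detections of one walking person collapse into a single track, which is the unit a search result would show. Because only confident detections may start new tracks, a flickering low-score detection cannot spawn a spurious duplicate.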

Accessing More In-Depth Insights

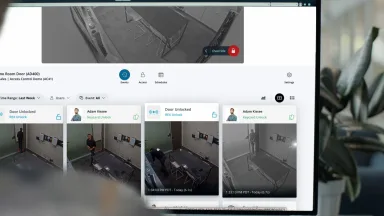

Our advanced algorithms do more than consolidate duplicate detections of objects; they also generate each object's movement trajectory. Building on this, we introduced a new feature that lets customers view the trajectories of detected individuals over time.
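A track's stored boxes can be turned into a trajectory by picking one point per box and smoothing the sequence with a Kalman filter. The sketch below assumes a constant-velocity motion model per image axis, uses the bottom-center of each box as the tracked point, and takes `dt = 0.1` seconds from the 10-per-second detection rate. The noise parameters `q` and `r` and all function and class names are hypothetical, chosen for illustration.

```python
from dataclasses import dataclass

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass
class ConstantVelocityKF1D:
    """Kalman filter for one image coordinate with state (position, velocity)."""
    p: float            # position estimate (pixels)
    v: float = 0.0      # velocity estimate (pixels per second)
    p00: float = 100.0  # covariance: var(position)
    p01: float = 0.0    # covariance: cov(position, velocity)
    p11: float = 100.0  # covariance: var(velocity)
    q: float = 50.0     # hypothetical process-noise intensity (white-noise acceleration)
    r: float = 25.0     # hypothetical measurement-noise variance (pixels^2)

    def predict(self, dt: float) -> None:
        # x <- F x with F = [[1, dt], [0, 1]]; P <- F P F^T + Q
        self.p += dt * self.v
        a, b, d = self.p00, self.p01, self.p11
        self.p00 = a + 2 * dt * b + dt * dt * d + self.q * dt**3 / 3
        self.p01 = b + dt * d + self.q * dt**2 / 2
        self.p11 = d + self.q * dt

    def update(self, z: float) -> None:
        # Measurement model H = [1, 0]: only position is observed
        innovation = z - self.p
        s = self.p00 + self.r
        k0, k1 = self.p00 / s, self.p01 / s  # Kalman gain
        self.p += k0 * innovation
        self.v += k1 * innovation
        a, b, d = self.p00, self.p01, self.p11
        self.p00 = (1 - k0) * a
        self.p01 = (1 - k0) * b
        self.p11 = d - k1 * b


def anchor_point(box: Box) -> tuple[float, float]:
    """Bottom-center of the box, a common stand-in for where a person stands."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, y2)


def smooth_trajectory(boxes: list[Box], dt: float = 0.1) -> list[tuple[float, float]]:
    """Turn one track's per-frame boxes into a smoothed (x, y) polyline.
    dt = 0.1 s assumes detections arrive 10 times per second."""
    if not boxes:
        return []
    x0, y0 = anchor_point(boxes[0])
    kx, ky = ConstantVelocityKF1D(x0), ConstantVelocityKF1D(y0)
    path = [(x0, y0)]
    for box in boxes[1:]:
        zx, zy = anchor_point(box)
        for kf, z in ((kx, zx), (ky, zy)):
            kf.predict(dt)
            kf.update(z)
        path.append((kx.p, ky.p))
    return path
```

Because the filter weighs each new measurement against a motion prediction, frame-to-frame jitter in box positions is damped, producing a steadier path to draw over the scene.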

This continuous monitoring, displayed in our UX as a trajectory through the scene, enables security personnel to navigate and analyze search results efficiently, saving valuable time and supporting prompt, correct decision-making. With the trajectory view, users can glean actionable insights without being overwhelmed by redundant information.

Boosting Real-Time Responses

Last but not least is an improvement to the foundation of our CV capabilities: computational speed. All of our computations are performed directly on the camera's chip, enabling real-time analysis and decision-making.

Our newest chips process and analyze video footage at 10 frames per second (FPS), giving security personnel immediate, actionable results. This high-speed processing significantly improves situational awareness and response times, helping customers effectively monitor and address potential security threats.

The Impact and Future Possibilities of Verkada's Enhanced Motion Search

Verkada's revamped motion search feature represents a significant advancement in video analytics. By combining advanced computer vision detection models, state-of-the-art tracking algorithms, and fast on-camera computation, Verkada's engineers have made it easier for customers to detect and respond to threats. We expect to uncover even more beneficial use cases as our customers continue to test and use it in all types of settings.