Introducing Verkada’s AI-Powered Search: Setting a New Standard for Conducting Investigations

We’re excited to announce Verkada’s new AI-powered search, a feature within our Command platform that lets customers use freeform text to search for people and vehicles in a high level of detail. AI-powered search represents the culmination of years of investment in our hybrid cloud-based search capabilities and computer vision analytics. What began as basic motion search, offered as the default view of camera footage in place of a timeline or scrubber, evolved to include searching on specific attributes of people and vehicles, like clothing color or vehicle type. We recently added certain advanced analytics, including facial recognition and trajectory mapping. Now, with AI-powered search, customers are no longer restricted to a predefined list of search attributes for people and vehicles; instead, they can enter freeform text searches (“queries”) directly into the search bar to see relevant results. These advancements have collectively made our search capabilities some of the most powerful in the industry.

Beyond common characteristics such as the presence of a backpack or the color of a car, many of our customers need to search for people and vehicles across a much wider range of descriptive features. This capability is important for security and physical operations professionals, as it lets them search through their footage quickly with a far more expansive set of descriptors than before. It also addresses operational issues across a variety of industries, from retailers looking to identify shoplifters to manufacturing customers looking to support workplace safety (e.g., “person driving a forklift”).

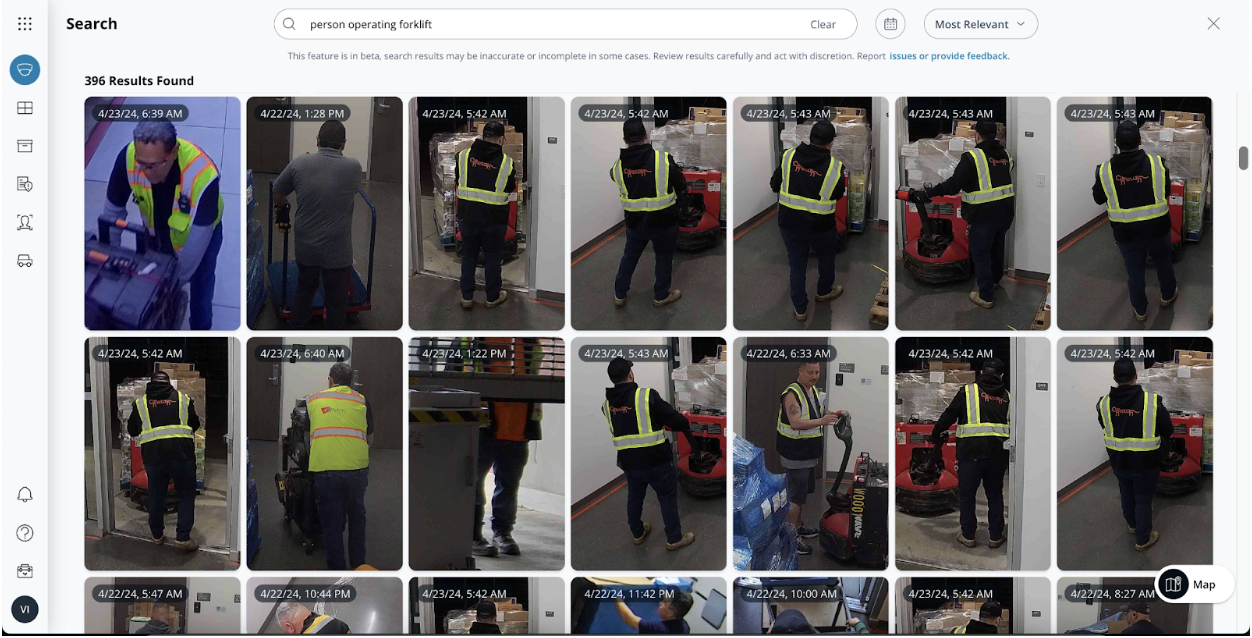

Query results for "person operating forklift"

What’s behind our AI-powered search?

When developing this feature, we employed a two-pronged approach. First, we leveraged a publicly available model with large language and large vision functionalities. This model is a multi-billion-parameter neural network trained to bring related images and text closer together while pushing unrelated ones apart, which makes direct comparisons between text and images possible. The foundation model also provides the basis for image classification and retrieval, allowing users to search for images using natural language. Second, our approach builds upon the foundation model in several important ways. For instance, we built an in-house model that processes customer video data ahead of time and caches the results, so a customer’s video footage does not need to be run through the foundation model each time a query is performed. This allows our AI-powered search to index and retrieve relevant footage for our customers quickly and at scale.
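To make the idea of precomputed, cached comparisons concrete, here is a minimal sketch of how a text query could be matched against stored footage embeddings using cosine similarity. It is an illustration under assumptions, not a description of Verkada's implementation: the `EmbeddingIndex` class, the clip IDs, and the three-dimensional toy vectors are all hypothetical, and in a real system both the footage vectors and the query vector would come from a text-image model.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


class EmbeddingIndex:
    """Hypothetical in-memory cache of footage embeddings computed ahead of time."""

    def __init__(self):
        self._items = []  # list of (clip_id, unit-length embedding)

    def add(self, clip_id, embedding):
        # Done once per clip at ingest time, not at query time.
        self._items.append((clip_id, normalize(embedding)))

    def search(self, query_embedding, top_k=3):
        # At query time only the short text query needs to be embedded;
        # stored clips are compared with a cheap dot product.
        q = normalize(query_embedding)
        scored = [
            (sum(a * b for a, b in zip(q, vec)), clip_id)
            for clip_id, vec in self._items
        ]
        scored.sort(reverse=True)
        return [(clip_id, score) for score, clip_id in scored[:top_k]]


# Toy embeddings standing in for model output (assumed values).
index = EmbeddingIndex()
index.add("dock-cam-0412", [0.9, 0.1, 0.0])
index.add("lobby-cam-0903", [0.1, 0.9, 0.1])
index.add("lot-cam-1130", [0.0, 0.2, 0.9])

# Pretend this vector is the embedding of "person operating forklift".
query_vec = [0.8, 0.2, 0.1]
results = index.search(query_vec, top_k=2)
for clip_id, score in results:
    print(clip_id, round(score, 3))
```

The design point is the split in cost: embedding footage is expensive but happens once, while each query only requires embedding a short piece of text and ranking cached vectors.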

See here for more information about our foundation model and how we’ve improved upon it.

Industry-Specific Use Cases

While AI-powered search opens a near-limitless number of attribute searches for people and vehicles generally, this feature can also be used to tackle industry-specific use cases. In our user guide we provide an extensive list of specific queries organized by industry that can be helpful for conducting investigations relevant to your organization. Below we outline how a few industries can directly benefit from AI-powered search:

Retail - Anti-Shoplifting

Our retail customers can use AI-powered search, for example, to find specific objects on a person or vehicle. Our AI-powered search capability can detect text, brands and logos, so retail customers can identify specific objects or apparel using a high level of descriptive detail. Customers can structure queries like, “person holding Louis Vuitton bag,” or “person holding red vase near front door” to better identify shoplifting suspects.

Manufacturing & Logistics - Workplace and Occupational Safety

Our manufacturing customers can use AI-powered search to better monitor their premises to enforce safety measures and reduce workplace injuries. These manufacturing customers can query “person wearing helmet,” or “person wearing safety vest” near a specific machine or corridor to confirm that proper precautions are taken in required areas. These customers can also query for a “person operating a forklift” or for specific words on the side of a truck to monitor their logistics.

Healthcare - Safety and Health Compliance

Our healthcare customers can use AI-powered search to better monitor their hospitals and clinics and help their patients, doctors and other staff stay safe. These healthcare customers can query “person wearing medical mask,” or “person washing hands” to enforce medical safety policies, “person holding baby” for enhanced NICU monitoring, or “San Mateo ambulance” to better track their ambulance fleets.

How do we seek to prevent misuse of this feature and minimize inappropriate results?

Moderation is a technique used in natural language-based search that bars inappropriate results from certain queries. We have built query moderation into our platform to help reduce the risk that our AI-powered search is used maliciously or in ways that may be harmful. At the same time, we have sought to implement moderation in a way that isn’t so restrictive that it compromises the feature’s usability. Striking the balance between respectful and useful searches is paramount for us.

Unlike our filter-based attribute search, which has a predefined set of descriptors for narrowing search results, our AI-powered search lets customers search their footage for a wide range of attributes using their own words. Because our foundation model has been trained on publicly available data from the Internet, results may still be inaccurate, inappropriate or offensive. Moderation is a critical safeguard for this powerful feature.

We also leverage industry-recognized practices, including open source data and OpenAI’s moderation APIs, to make moderation more effective.
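As a rough illustration of what query moderation can look like, the sketch below checks a query before it reaches search: first against a local blocklist, then against an optional classifier. Everything here is an assumption made for illustration, including the `moderate_query` function, the placeholder blocklist entries, and the `classifier` hook. In practice the hook could wrap a hosted moderation service, but no real API is called in this example.

```python
from dataclasses import dataclass

# Placeholder entries; a real list would be curated, possibly from open source data.
BLOCKED_TERMS = {"blocked_term_a", "blocked_term_b"}


@dataclass
class ModerationResult:
    allowed: bool
    reason: str


def moderate_query(query, classifier=None):
    """Decide whether a freeform search query may be run.

    `classifier` is an optional callable taking the query string and
    returning True when the query should be rejected (hypothetical hook).
    """
    tokens = set(query.lower().split())
    hits = tokens & BLOCKED_TERMS
    if hits:
        return ModerationResult(False, "blocked term: " + sorted(hits)[0])
    if classifier is not None and classifier(query):
        return ModerationResult(False, "flagged by classifier")
    return ModerationResult(True, "ok")


ok = moderate_query("person operating forklift")
blocked = moderate_query("person blocked_term_a near door")
flagged = moderate_query("some query", classifier=lambda q: True)
print(ok, blocked, flagged, sep="\n")
```

Running the cheap local check first keeps ordinary queries fast, and only queries that pass it need to go to a heavier classifier.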

You can read more about our moderation techniques in the white paper here.